The goal is to use a Machine Learning model to predict how many Titanic passengers survived.

The objectives are to visualize the data, to analyze the relationship between each variable and survival, and to compare actual survivors with predicted survivors.

Using a Machine Learning approach, I built a binary classification model with Random Forest to predict survival for the passengers in the test set. I applied feature engineering and used data visualization to support my analysis.
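The model code itself is not part of this write-up. The following is a minimal sketch of a Random Forest binary classifier in scikit-learn, assuming the standard Kaggle Titanic columns (`Pclass`, `Sex`, `Age`, `Fare`, `SibSp`, `Parch`, `Survived`). The toy rows, the hypothetical `prepare` helper, the feature list, and the hyperparameters are illustrative assumptions; the real project would load `train.csv` and `test.csv` instead.

```python
import pandas as pd
from sklearn.ensemble import RandomForestClassifier

# Hypothetical toy rows using the Kaggle Titanic column names.
train = pd.DataFrame({
    "Pclass":   [1, 3, 2, 3, 1, 3, 2, 3],
    "Sex":      ["female", "male", "female", "male", "male", "female", "male", "male"],
    "Age":      [29, 22, 35, None, 54, 4, 27, 19],
    "Fare":     [71.3, 7.25, 26.0, 8.05, 51.9, 16.7, 13.0, 7.9],
    "SibSp":    [1, 0, 1, 0, 0, 1, 0, 0],
    "Parch":    [0, 0, 0, 0, 0, 1, 0, 0],
    "Survived": [1, 0, 1, 0, 0, 1, 0, 0],
})
test = train.drop(columns="Survived").head(3)

def prepare(df):
    """Hypothetical minimal feature engineering for the sketch."""
    out = df.copy()
    out["Sex"] = (out["Sex"] == "female").astype(int)        # female -> 1, male -> 0
    out["Age"] = out["Age"].fillna(out["Age"].median())      # fill missing ages
    out["Fare"] = out["Fare"].fillna(out["Fare"].median())
    out["Family_Size"] = out["SibSp"] + out["Parch"] + 1     # passenger + relatives
    return out[["Pclass", "Sex", "Age", "Fare", "Family_Size"]]

X_train = prepare(train)
y_train = train["Survived"]

# Illustrative hyperparameters, not tuned values from the project.
model = RandomForestClassifier(n_estimators=100, max_depth=5, random_state=42)
model.fit(X_train, y_train)

y_train_pred = model.predict(X_train)       # in-sample predictions on the training set
y_test_pred = model.predict(prepare(test))  # 0 = did not survive, 1 = survived
print(y_test_pred)
```

A Random Forest is a reasonable default here because it handles mixed numeric and encoded categorical features without scaling; in practice, cross-validated predictions (for example with `sklearn.model_selection.cross_val_predict`) give a more honest `y_train_pred` than in-sample predictions.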

OVERVIEW

This project is based on a well-known Kaggle competition: Titanic. In it, I analyzed data on the survivors of the sinking of the Titanic. The task is fairly simple: build a model that predicts whether a passenger survived, based on certain variables.

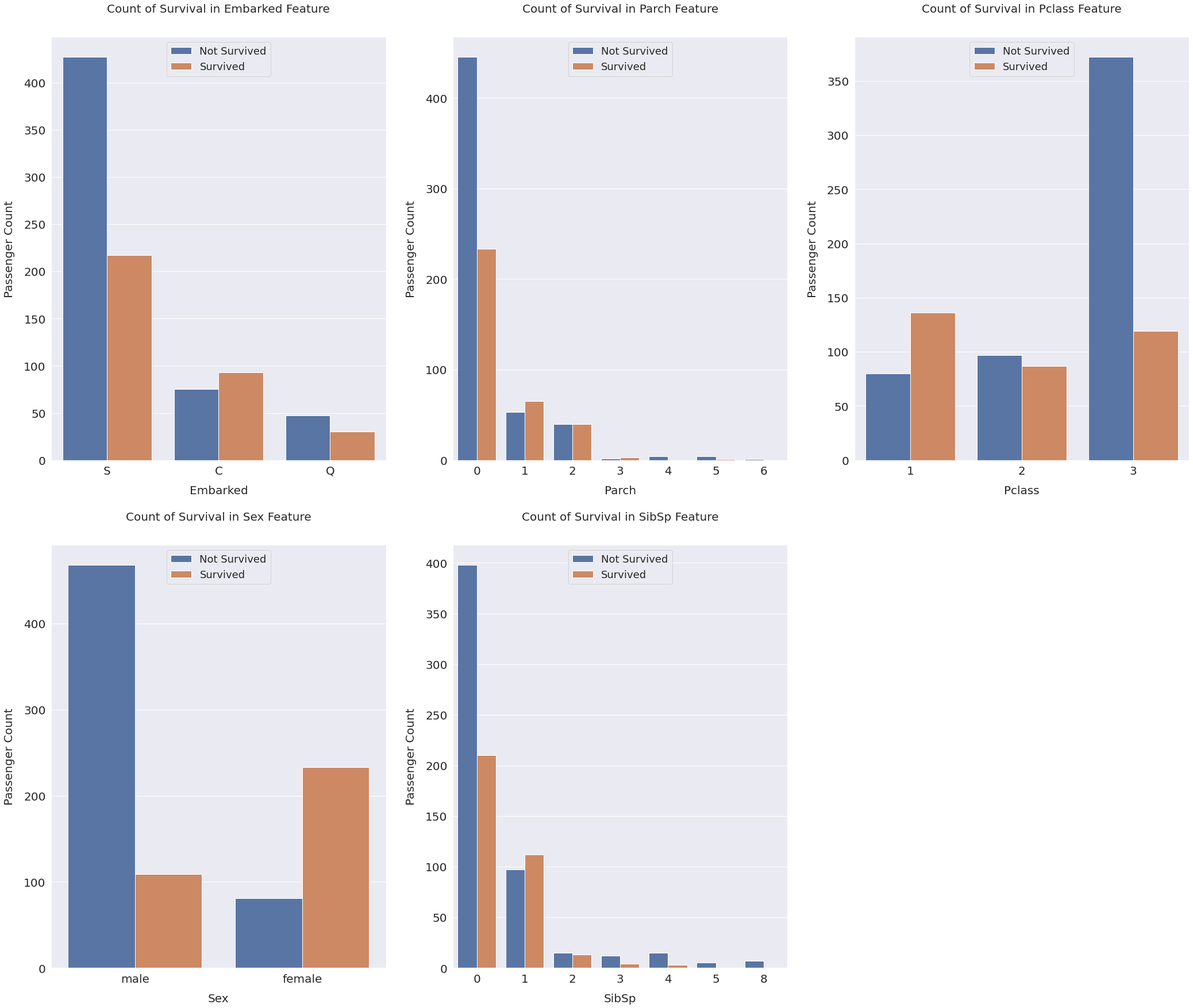

From the resulting plots, we can see that:

The graph shows that the more expensive the ticket, the more likely the passenger was to survive, especially for fares of 56 and above. The number of survivors also rises considerably from a fare of 10.5 upward, except for a dip in the group of ticket holders who paid 13–15.742.
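Fare groups such as these are typically produced by quantile binning. The sketch below assumes the groups come from `pandas.qcut`; the number of bins and the toy data are hypothetical, so the resulting edges will not match the project's plot.

```python
import pandas as pd

def survival_rate_by_fare(df, n_bins=4):
    """Split Fare into quantile bins and return the survival rate of each bin."""
    fare_band = pd.qcut(df["Fare"], q=n_bins)  # roughly equal-sized fare groups
    return df.groupby(fare_band, observed=True)["Survived"].mean()

# Hypothetical toy data, for illustration only.
toy = pd.DataFrame({
    "Fare": [7.25, 7.9, 8.05, 10.5, 13.0, 15.0, 26.0, 56.5, 71.3, 80.0],
    "Survived": [0, 0, 0, 1, 0, 0, 1, 1, 1, 1],
})
rates = survival_rate_by_fare(toy)
print(rates)
```

Quantile bins keep each group about the same size, which makes per-bin survival rates comparable even though Titanic fares are heavily skewed.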

Based on the graph, young adult passengers were less likely to survive than children and the elderly. The passengers are dominated by young adults, mainly in the 18–24, 24–30, and 30–37 age groups.
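Age groups like these can be built with fixed bin edges. This sketch uses `pandas.cut` and reports both the passenger count (to show which groups dominate) and the survival rate per group. Only the 18, 24, 30, and 37 edges come from the text; the other edges and the toy data are hypothetical.

```python
import pandas as pd

# Edges 18, 24, 30, 37 come from the text; 0, 12, 60, 80 are hypothetical.
AGE_BINS = [0, 12, 18, 24, 30, 37, 60, 80]

def age_group_summary(df):
    """Passenger count and survival rate per age group.

    Passengers with a missing Age fall outside every bin and are dropped,
    and empty bins are omitted (observed=True).
    """
    age_group = pd.cut(df["Age"], bins=AGE_BINS)
    return df.groupby(age_group, observed=True)["Survived"].agg(["count", "mean"])

# Hypothetical toy data, for illustration only.
toy = pd.DataFrame({
    "Age": [4, 8, 20, 22, 23, 26, 28, 33, 35, 65],
    "Survived": [1, 1, 0, 0, 1, 0, 0, 1, 0, 1],
})
summary = age_group_summary(toy)
print(summary)
```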

The plot shows that passengers with a Family_Size of 2, 3, or 4 had a greater chance of surviving, while the probability decreases for other family sizes; passengers whose Family_Size_Grouped is Small are also more likely to survive.

The Family_Size_Grouped plot shows the same pattern: the probability of survival increases for family sizes 2, 3, and 4.
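The write-up does not define these features. A common construction, assumed here, is `Family_Size = SibSp + Parch + 1` (siblings/spouses plus parents/children aboard, plus the passenger). The group names other than Small and all of the cut-offs below are hypothetical, chosen so that sizes 2 to 4 map to Small as the plot suggests.

```python
def family_size(sib_sp: int, parch: int) -> int:
    """Relatives aboard (SibSp + Parch) plus the passenger themself."""
    return sib_sp + parch + 1

def family_size_grouped(size: int) -> str:
    """Hypothetical grouping: only the 'Small' = 2..4 range is suggested by the text."""
    if size == 1:
        return "Alone"
    if size <= 4:
        return "Small"
    if size <= 6:
        return "Medium"
    return "Large"

print(family_size(1, 2))                  # 4
print(family_size_grouped(family_size(1, 2)))  # Small
```

In a pandas workflow the same functions could be applied column-wise, for example `df["Family_Size_Grouped"] = df["Family_Size"].map(family_size_grouped)`.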

y_train and y_train_pred each contain 891 passengers, the full training set. The results of the confusion matrix can be explained as follows:

Based on the pie chart above, 61.48% of passengers are predicted not to survive and 38.52% are predicted to survive.
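To make the confusion-matrix step and the pie-chart percentages concrete, here is a small dependency-free sketch. `binary_confusion_matrix` uses the same `[[TN, FP], [FN, TP]]` layout that `sklearn.metrics.confusion_matrix` returns for labels 0 and 1, and `prediction_shares` computes the kind of percentages a pie chart of predictions displays. The function names and sample labels are hypothetical, not the project's actual code or results.

```python
from collections import Counter

def binary_confusion_matrix(y_true, y_pred):
    """Return [[TN, FP], [FN, TP]] for labels 0 (died) and 1 (survived)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    matrix = [[0, 0], [0, 0]]
    for actual, predicted in zip(y_true, y_pred):
        # Row = actual class, column = predicted class.
        matrix[actual][predicted] += 1
    return matrix

def accuracy(matrix):
    """Fraction of passengers on the diagonal (correct predictions)."""
    (tn, fp), (fn, tp) = matrix
    return (tn + tp) / (tn + fp + fn + tp)

def prediction_shares(y_pred):
    """Percentage of passengers predicted not to survive (0) and to survive (1)."""
    counts = Counter(y_pred)
    total = len(y_pred)
    return {label: round(100 * counts[label] / total, 2) for label in (0, 1)}

# Hypothetical labels, for illustration only.
y_true = [0, 0, 1, 1, 1, 0]
y_pred = [0, 1, 1, 0, 1, 0]
cm = binary_confusion_matrix(y_true, y_pred)
print(cm)  # [[2, 1], [1, 2]]
print(accuracy(cm))
print(prediction_shares(y_pred))
```

Applied to the training predictions, the four cells of the matrix would sum to the number of passengers compared, and the two shares from `prediction_shares` add up to 100% (up to rounding).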